Auditing and Reviewing Amazon EKS Workloads

Auditing and reviewing access to workloads deployed in Kubernetes clusters is critical for achieving compliance. Cloud native observability, a technique for capturing events, logs, metrics, and traces, provides insights but doesn’t associate or map the activity to Kubernetes users.

Teleport’s auditing capabilities provide detailed visibility into infrastructure access and behavior, helping organizations meet and exceed their compliance objectives. Teleport captures session events and the commands run during a session. It can even play back a recording of an entire interactive session run within a Kubernetes pod deployed in Amazon EKS.

Teleport Audit, one of the key pillars of the platform, delivers the following capabilities:

- Unified Resource Catalog - A dynamic list of infrastructure resources including servers, Kubernetes clusters, databases, applications and more

- Audit Log - Acts as a single source of truth for all the security events generated by Teleport

- Live Session View - A dynamic list of live sessions across all protocols and environments

- Kubectl Exec Session Recording - An interactive mechanism to play back sessions that are recorded and stored in a centralized location

Part 4: Auditing Amazon EKS access

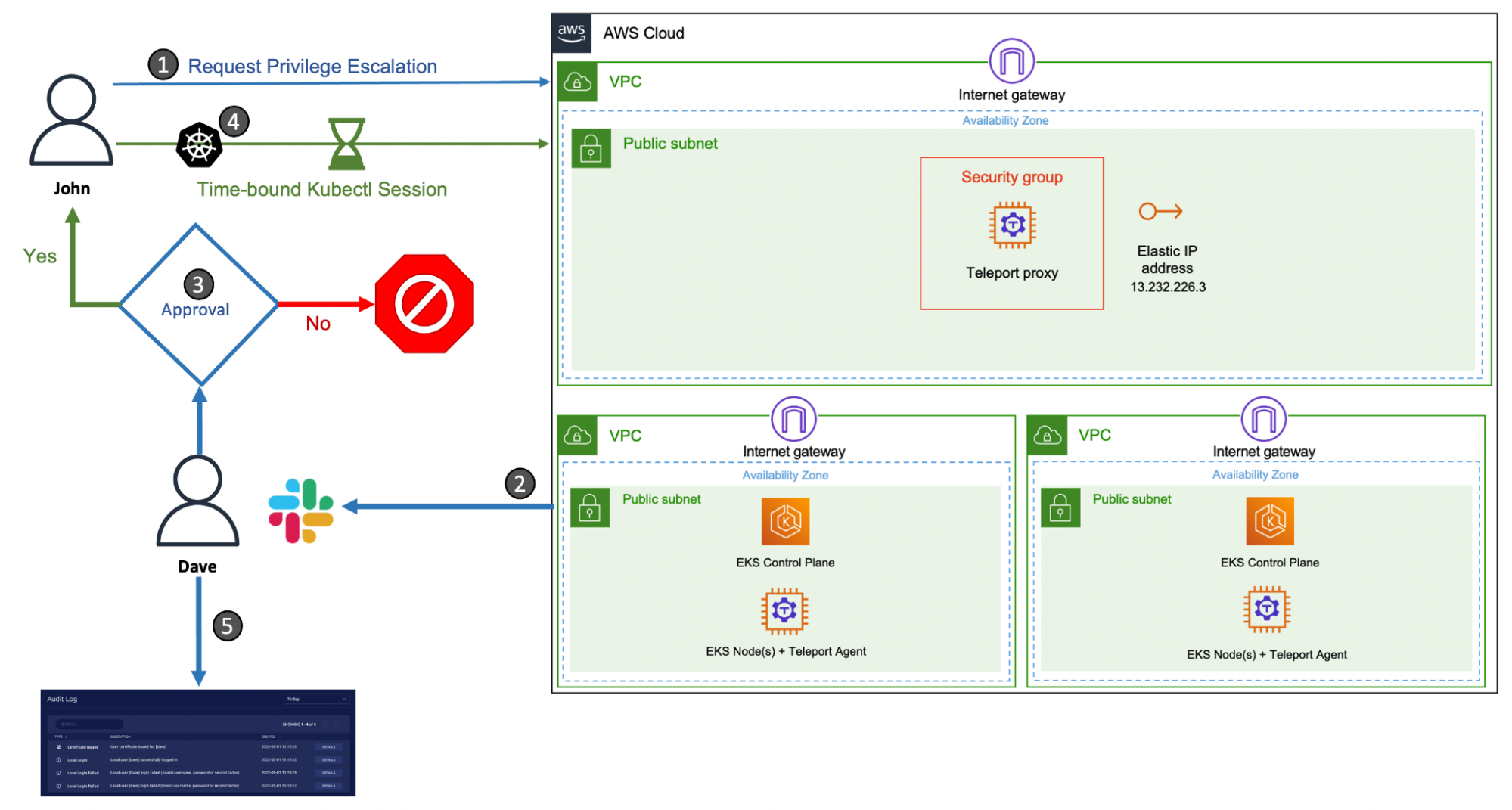

In the previous tutorial, we demonstrated how to perform privilege escalation with Teleport RBAC and Slack to provide time-bound access to production clusters. In that scenario, a contractor requested a time-bound kubectl session on a production cluster, and a team leader approved it.

We will extend the scenario to cover auditing and reviewing the cluster and workload access initiated by the contractor. We will also look at how to persist Teleport events in Amazon DynamoDB within the AWS infrastructure.

| Teleport User | Persona | Teleport Roles |
| --- | --- | --- |
| Dave | Team Leader | access,auditor,editor,team-lead |
| John | Contractor | access,contractor |
| John | EKS Admin* | access,eks-admin |

Tip: The auditor role is a preset role that allows reading cluster events and audit logs and playing back session recordings. The team leader persona, Dave, is assigned the auditor role because he needs access to the session recordings and audit logs.
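For reference, the custom roles in the table could be defined roughly as follows. This is a minimal sketch, assuming Teleport role version v5. The one-hour `max_session_ttl` and the output directory are hypothetical values, and the roles created in the previous tutorial may differ. The script writes each definition to a file and applies it with `tctl create` only when `tctl` is available.

```shell
#!/usr/bin/env bash
# Sketch: hypothetical definitions for the custom roles in the table above.
# Assumes Teleport role version v5; names and values are illustrative only.
set -e

ROLE_DIR="${ROLE_DIR:-./teleport-roles}"   # hypothetical output directory
mkdir -p "$ROLE_DIR"

# eks-admin: maps to the Kubernetes system:masters group on every cluster.
# The 1h session TTL is a hypothetical way to bound the elevated session.
cat > "$ROLE_DIR/eks-admin.yaml" <<'EOF'
kind: role
version: v5
metadata:
  name: eks-admin
spec:
  allow:
    kubernetes_groups: ["system:masters"]
    kubernetes_labels:
      "*": "*"
  options:
    max_session_ttl: 1h
EOF

# contractor: may request the eks-admin role.
cat > "$ROLE_DIR/contractor.yaml" <<'EOF'
kind: role
version: v5
metadata:
  name: contractor
spec:
  allow:
    request:
      roles: ["eks-admin"]
EOF

# team-lead: may approve or deny requests for the eks-admin role.
cat > "$ROLE_DIR/team-lead.yaml" <<'EOF'
kind: role
version: v5
metadata:
  name: team-lead
spec:
  allow:
    review_requests:
      roles: ["eks-admin"]
EOF

# Create or overwrite the roles, but only if tctl is installed on this machine.
if command -v tctl >/dev/null 2>&1; then
  for f in "$ROLE_DIR"/*.yaml; do
    tctl create -f "$f"
  done
fi
```

Splitting the privilege into a requestable role, rather than granting `system:masters` to the contractor role directly, keeps John's standing access minimal. Every escalation then goes through an approval that shows up in the audit log.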

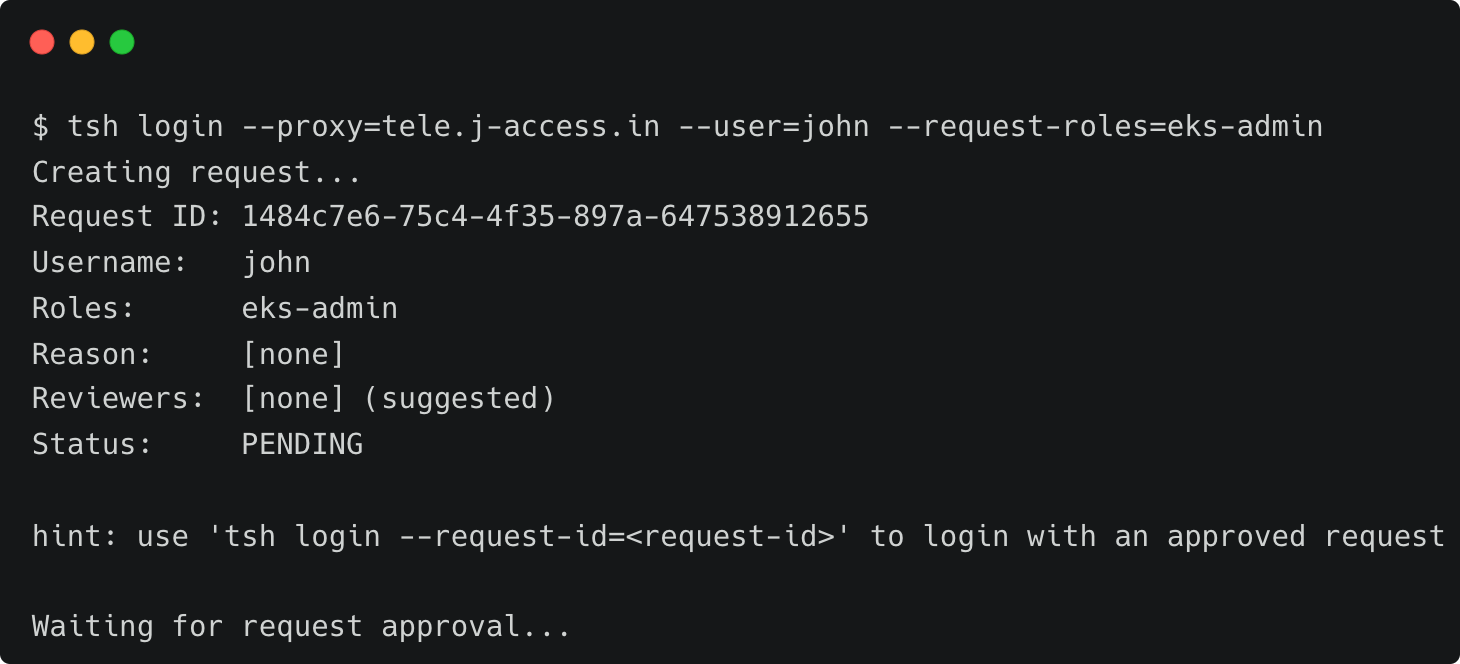

Step 1 - Requesting privilege escalation for a production cluster

Start by requesting access to the Amazon EKS production cluster for John with elevated privileges, and have Dave approve it.

```shell
tsh login --proxy=tele.j-access.in --user=john --request-roles=eks-admin
```

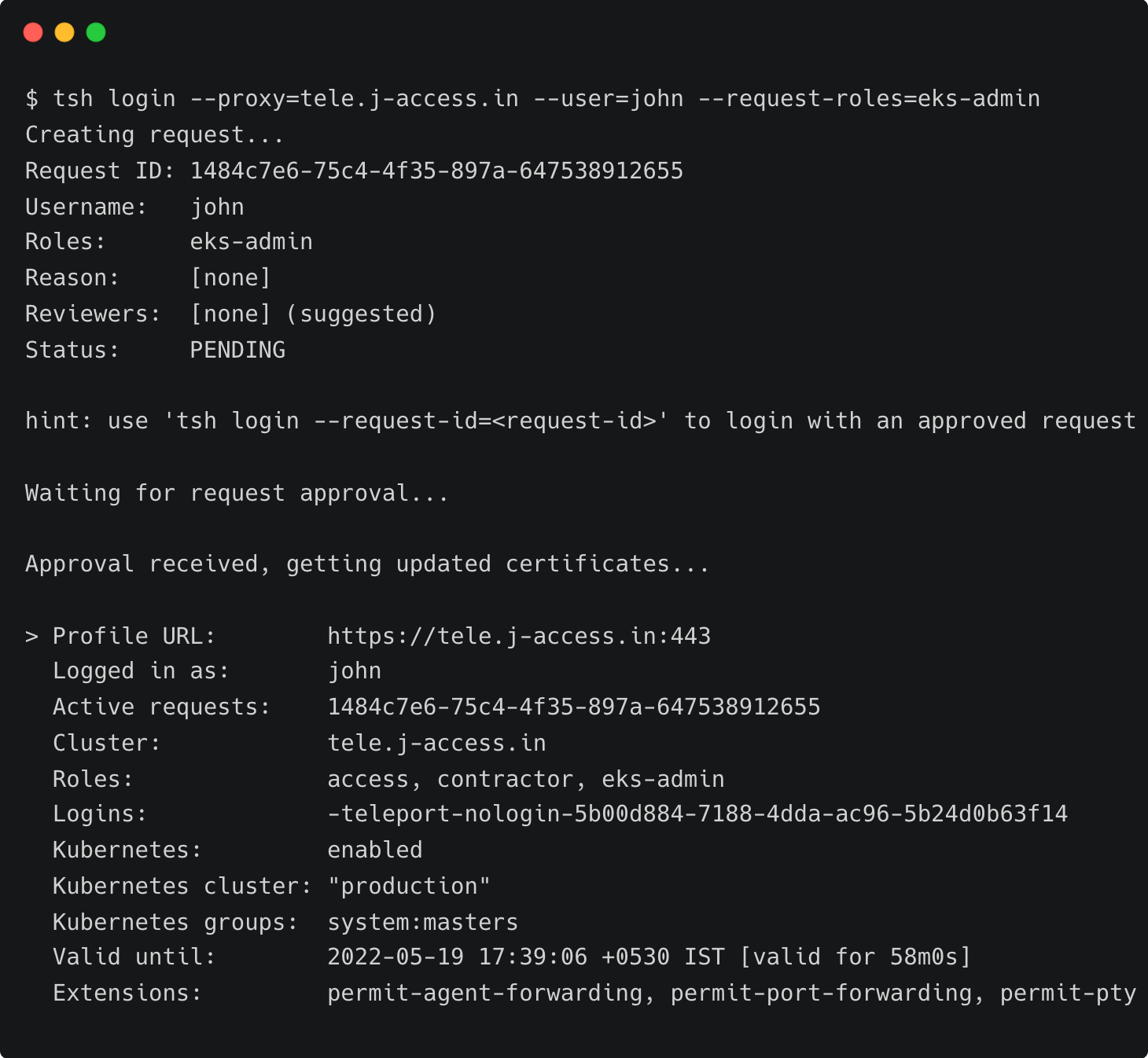

Once Dave approves the request, John assumes the role of an EKS administrator for 60 minutes.
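In the previous tutorial the approval arrived through Slack. Dave can also review pending requests from his own terminal with `tsh`. The following is a minimal sketch that assumes Dave is already logged in to the same cluster. The helper name is hypothetical, and the request ID is the one listed by `tsh request ls`.

```shell
# Hypothetical helper for the approver (Dave): show pending access requests,
# then approve the request whose ID is passed as the first argument.
review_eks_admin_request() {
  local request_id="${1:-}"
  if [ -z "$request_id" ]; then
    echo "usage: review_eks_admin_request <request-id>" >&2
    return 1
  fi
  tsh request ls
  tsh request review --approve "$request_id"
}
```

For example, `review_eks_admin_request <request-id>` approves a single request. Denying one works the same way with `--deny` in place of `--approve`.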

Step 2 - Accessing containerized workloads running in Amazon EKS

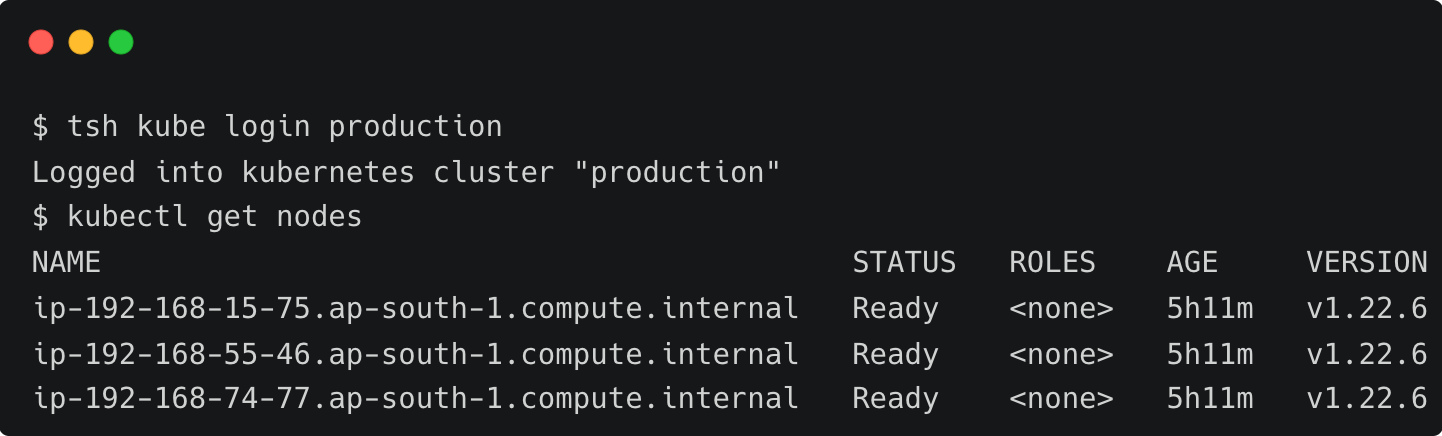

After John logs into the production Kubernetes cluster, he performs a few tasks.

Since the eks-admin role is mapped to the Kubernetes system:masters group, John can perform any task, including running privileged pods. John deploys a pod in privileged mode, taking advantage of his short-lived privileges.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: escape-to-host
  labels:
    app: escape-to-host
spec:
  hostPID: true
  containers:
  - name: escape-to-host
    image: ubuntu
    tty: true
    securityContext:
      privileged: true
    command: [ "nsenter", "--target", "1", "--mount", "--uts", "--ipc", "--net", "--pid", "--", "bash" ]
```

This specification escapes the pod's isolation. Because the pod shares the host's PID namespace (hostPID: true) and runs in privileged mode, nsenter can enter the namespaces of the host's init process (PID 1) and start bash there, which gives direct root access to the host OS.

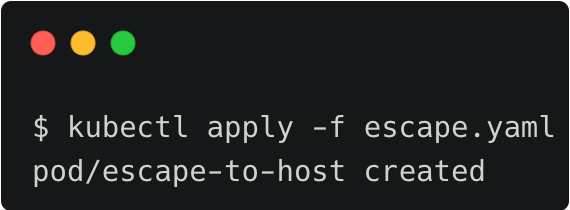

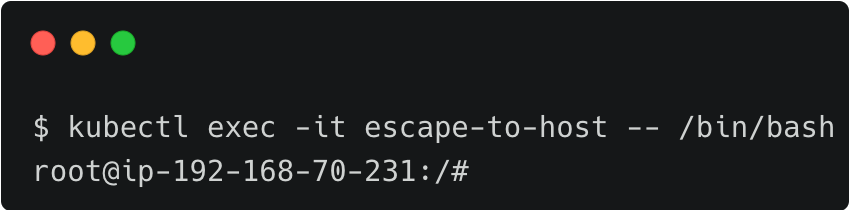

John deploys the pod into the default namespace and then executes the bash command within the container, which drops him straight into the host shell.

```shell
kubectl apply -f escape.yaml
```
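The exact commands John ran appear only in the screenshots. One way to reproduce the sequence is sketched below, assuming the pod spec above is saved as escape.yaml and John's kubeconfig context points at the production cluster through Teleport. The function name is hypothetical. Because the session is an interactive `kubectl exec`, Teleport records it.

```shell
# Hypothetical wrapper around the escape sequence described above.
# Assumes kubectl is configured (via Teleport) for the production cluster.
escape_to_host() {
  local manifest="${1:-escape.yaml}"
  kubectl apply -f "$manifest"
  # Block until the pod reports Ready before opening a session in it.
  kubectl wait --for=condition=Ready pod/escape-to-host --timeout=120s
  # Join the mount, UTS, IPC, network, and PID namespaces of host PID 1.
  kubectl exec -it escape-to-host -- \
    nsenter --target 1 --mount --uts --ipc --net --pid -- bash
}
```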

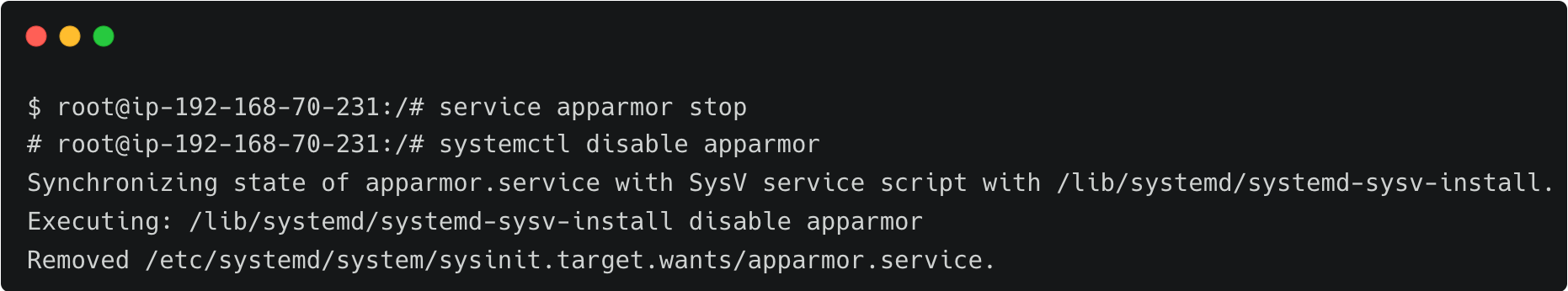

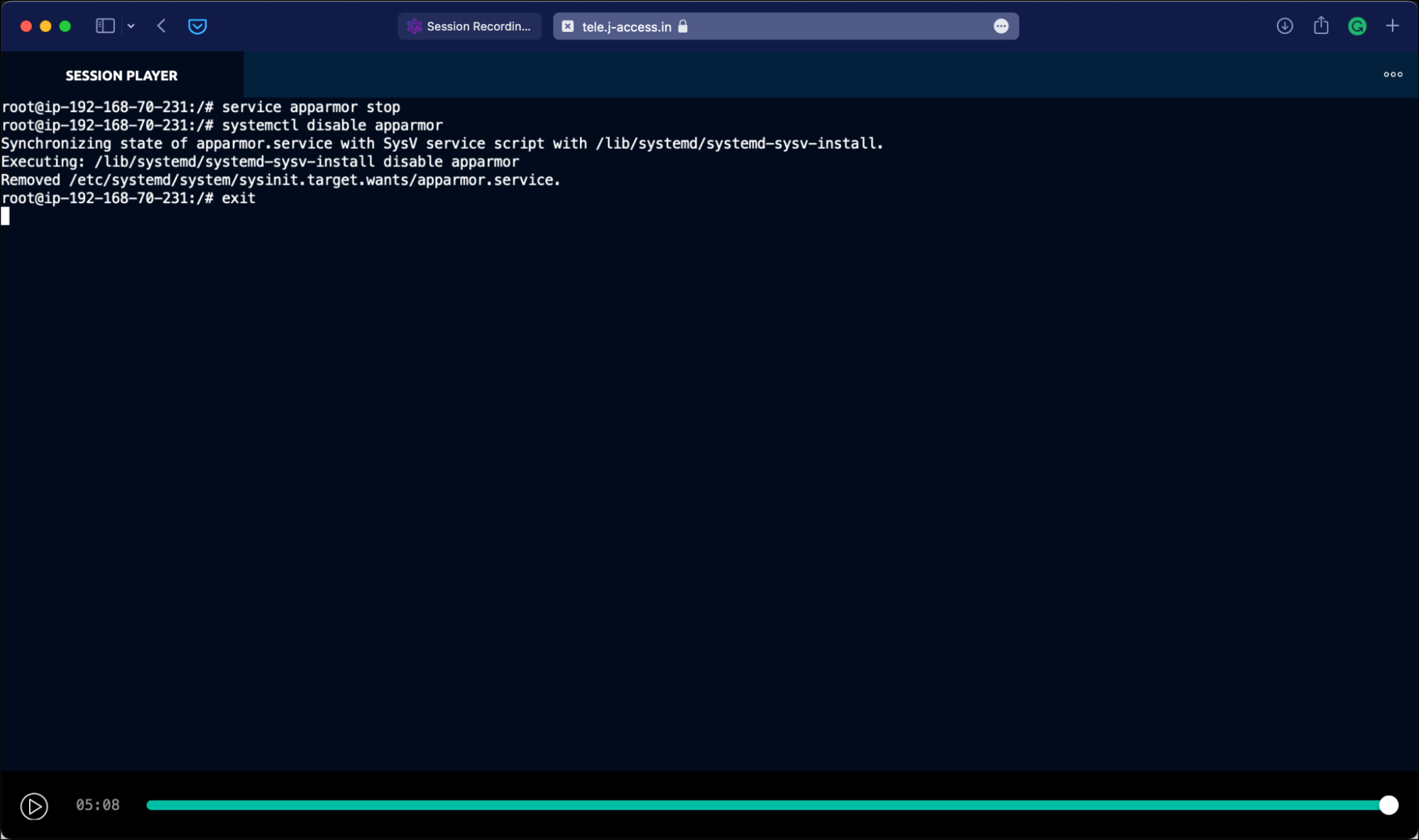

With root access to the host, John deliberately disables AppArmor, the Linux security module that enforces mandatory access control (MAC) policies. This step should never be performed on a production server, and it exposes the node to security risks.

After a few minutes, John ends his session, and his elevated privileges eventually expire as defined by the RBAC policy.

A couple of days later, during a regular maintenance check, an automated script reports that the mandatory AppArmor module is missing on one of the production cluster nodes.
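The article does not show the maintenance script itself. A node-level check along these lines could serve the same purpose. This is a minimal sketch, assuming the kernel exposes AppArmor status under /sys/module/apparmor and securityfs is mounted at /sys/kernel/security. The function name is hypothetical. Both paths are parameters so the check can be pointed at other files.

```shell
# Hypothetical AppArmor health check for a cluster node.
# Returns 0 when the module is enabled and at least one profile is loaded.
check_apparmor() {
  local enabled_file="${1:-/sys/module/apparmor/parameters/enabled}"
  local profiles_file="${2:-/sys/kernel/security/apparmor/profiles}"
  # The kernel module must exist and report "Y".
  if [ ! -r "$enabled_file" ] || [ "$(cat "$enabled_file")" != "Y" ]; then
    echo "apparmor module disabled or missing"
    return 1
  fi
  # Pseudo-files often report size 0, so test for content with grep instead of -s.
  # Reading the profiles list usually requires root.
  if ! grep -q . "$profiles_file" 2>/dev/null; then
    echo "apparmor enabled but no profiles loaded"
    return 1
  fi
  echo "apparmor ok"
}
```

Run as root on each node, `check_apparmor || echo "AppArmor check failed on $(hostname)" >&2` flags a node where the module is gone or its profiles have been unloaded.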

Dave now wants to access the recording of the last kubectl session initiated by John to identify the problem.

He logs in to the Web UI with his credentials and opens the session recordings under the Activity tab in the left navigation bar.

The first entry, named production/default/escape-to-host, is John's most recent session.

Dave plays back the entire session and sees that John disabled the AppArmor module.

Dave can also play back the session in his terminal. To do so, he copies the session ID from the Web UI and runs the following command:

```shell
tsh play 6803b48a-21ec-4592-946a-6d683731c1e7
```

It is also possible to print the session events to the terminal as JSON by adding the --format=json flag:

```shell
tsh play --format=json 6803b48a-21ec-4592-946a-6d683731c1e7
```
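To keep a copy of the evidence alongside an incident report, Dave could save the JSON events to a file instead of printing them. This is a minimal sketch. The helper name and the default output file name are hypothetical. It uses only the `tsh play --format=json` form shown above.

```shell
# Hypothetical helper: save the JSON events of a recorded session to a file.
# The session ID is the one copied from the Web UI.
save_session_events() {
  local session_id="${1:-}"
  if [ -z "$session_id" ]; then
    echo "usage: save_session_events <session-id> [output-file]" >&2
    return 1
  fi
  local out_file="${2:-session-${session_id}.json}"
  tsh play --format=json "$session_id" > "$out_file"
  echo "saved events for session ${session_id} to ${out_file}"
}
```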

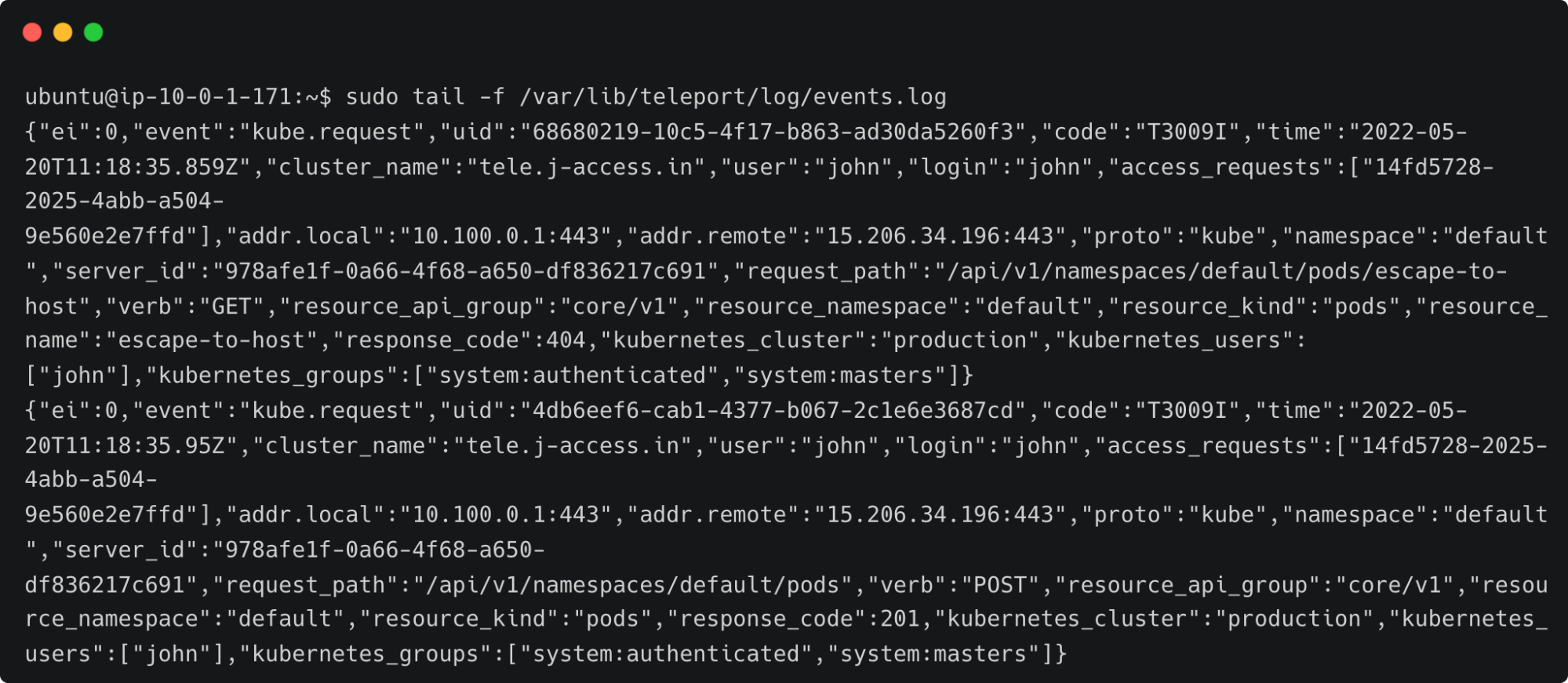

Apart from the session recordings, Teleport also maintains a detailed event log on the auth server. If you have access to the Teleport auth server, SSH into it to access the event log.
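Each line of the event log holds one JSON object, so a single user's activity can be pulled out with standard tools. The helper below is a minimal sketch with a hypothetical name. It assumes each event is written as compact JSON with a "user" field, so check a few lines of your own log before relying on it.

```shell
# Hypothetical helper: print the audit events recorded for one Teleport user.
# Assumes compact JSON lines such as {"event":"user.login","user":"john",...}.
events_for_user() {
  local user="$1"
  local log_file="${2:-/var/lib/teleport/log/events.log}"
  # Fixed-string match on the exact "user":"<name>" pair, closing quote included,
  # so "john" does not also match "johnny".
  grep -F "\"user\":\"${user}\"" "$log_file"
}
```

On the auth server, running `events_for_user john` as root lists every event attributed to John.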

```shell
sudo tail -f /var/lib/teleport/log/events.log
```

For example, the following events are generated and logged when John logs into Teleport:

By default, Teleport stores the event logs and session recordings in the /var/lib/teleport/log directory of the auth server. For high availability and durability, they can be moved to the AWS cloud, with event logs stored in Amazon DynamoDB and session recordings in Amazon S3 buckets. For the configuration steps, refer to the second step of the tutorial Amazon EC2 SSH Session Recording and Auditing with Teleport.
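For orientation, the relevant part of the auth server configuration looks roughly like the sketch below, which follows the shape of Teleport's DynamoDB backend configuration. The region, table names, and bucket name are hypothetical placeholders. The auth server also needs AWS credentials with access to those resources. The script only writes the fragment to a file. Merging it into /etc/teleport.yaml and restarting the auth service remain manual steps.

```shell
#!/usr/bin/env bash
# Sketch: write a teleport.yaml storage fragment that keeps cluster state and
# audit events in DynamoDB and session recordings in S3.
# All resource names below are hypothetical placeholders.
CONFIG_FILE="${CONFIG_FILE:-./teleport-storage.yaml}"

cat > "$CONFIG_FILE" <<'EOF'
teleport:
  storage:
    type: dynamodb
    region: us-east-1
    table_name: teleport-backend
    audit_events_uri: ["dynamodb://teleport-events"]
    audit_sessions_uri: "s3://teleport-session-recordings/records"
EOF

echo "wrote ${CONFIG_FILE}; merge it into /etc/teleport.yaml and restart the auth service"
```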

In this guided walkthrough, we explored Teleport's audit capabilities for reviewing Kubernetes sessions, along with the option to move event logs and session recordings to the AWS cloud. Access to the event logs and session recordings was granted through roles built from preset and custom role definitions.

As explained in the EC2 series, you can also extend the configuration to store the logs and recordings in Amazon DynamoDB and Amazon S3, which makes the data highly available and durable.