Database Access with ClickHouse

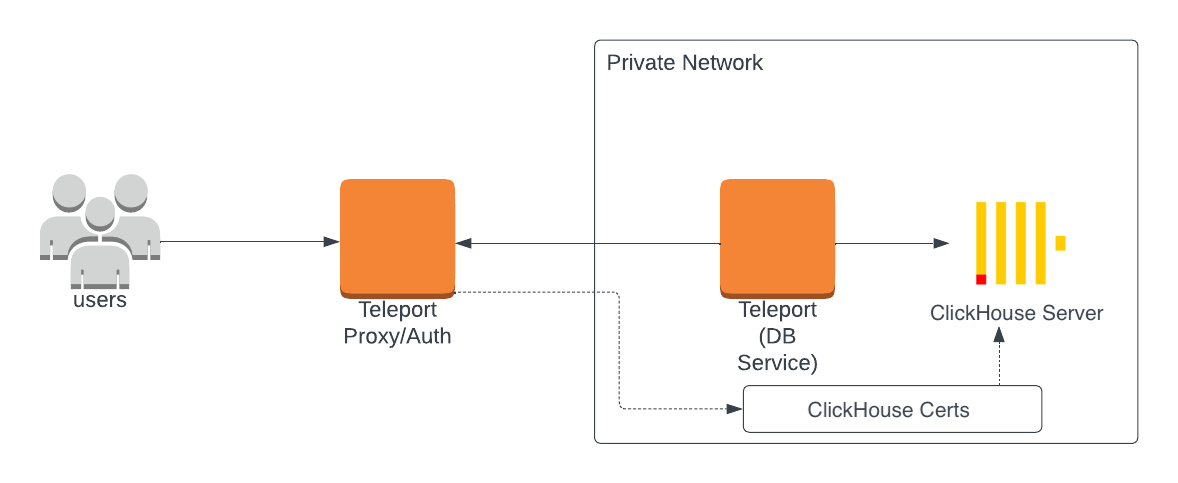

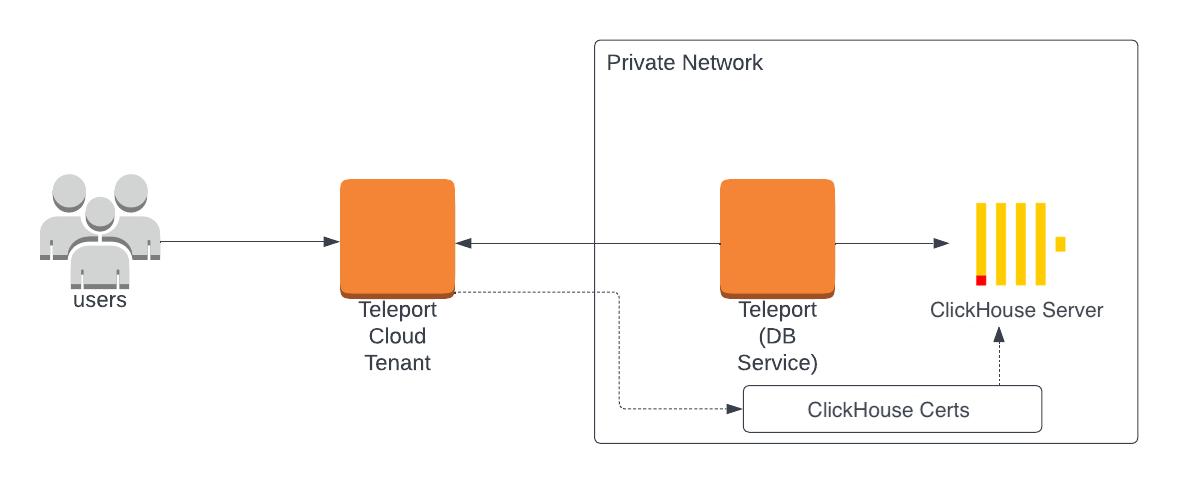

The Teleport ClickHouse integration allows you to enroll ClickHouse databases with Teleport.

The Teleport Database Service authenticates to ClickHouse using X.509 certificates, which are available for the ClickHouse HTTP and Native (TCP) interfaces. The Database Service can communicate over either protocol, and you select which one to use when configuring it.

Teleport audit logs for query activity are only supported for the ClickHouse HTTP interface. Teleport support for the ClickHouse Native (TCP) interface does not include audit logs for database query activity.

This guide will help you to:

- Install and configure a Teleport database agent.

- Set up Teleport to access your self-hosted ClickHouse database.

- Connect to your database through Teleport.

Prerequisites

- A running Teleport cluster version 14.3.33 or above. If you want to get started with Teleport, sign up for a free trial or set up a demo environment.

- The tctl admin tool and tsh client tool. Visit Installation for instructions on downloading tctl and tsh.

- Either a Linux host or Kubernetes cluster where you will run the Teleport Database Service.

You will also need the following, depending on the ClickHouse protocol you choose:

HTTP:

- A self-hosted deployment of ClickHouse Server v22.3 or later.

Native (TCP):

- A self-hosted deployment of ClickHouse Server v23.3 or later.

- The clickhouse-client installed and added to your user's PATH environment variable.

Step 1/5. Create a Teleport token and user

The Database Service requires a valid join token to join your Teleport cluster.

Run the following tctl command and save the token output in /tmp/token on the server that will run the Database Service:

$ tctl tokens add --type=db --format=text

abcd123-insecure-do-not-use-this
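One way to capture the token directly into /tmp/token is sketched below. The save_join_token helper is hypothetical (it is not part of Teleport); it only wraps standard shell tools so that the token file ends up readable by the current user alone.

```shell
# Hypothetical helper (not a Teleport command): write the join token read
# from stdin to a file that only the current user can read or write.
save_join_token() (
  token_file="${1:-/tmp/token}"
  umask 077                       # a newly created file gets mode 0600
  cat > "$token_file" || exit 1
  chmod 600 "$token_file"         # tighten the mode if the file already existed
)

# Usage on the host that will run the Database Service (needs tctl and a
# valid admin session, so it is left commented out here):
#   tctl tokens add --type=db --format=text | save_join_token /tmp/token
```

The function body runs in a subshell, so the umask change does not leak into the calling shell.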

To modify an existing user to provide access to the Database Service, see Database Access Controls.

Teleport Community Edition:

Create a local Teleport user with the built-in access role:

$ tctl users add \
  --roles=access \
  --db-users="*" \
  --db-names="*" \
  alice

Teleport Enterprise/Enterprise Cloud:

Create a local Teleport user with the built-in access and requester roles:

$ tctl users add \
  --roles=access,requester \
  --db-users="*" \
  --db-names="*" \
  alice

| Flag | Description |
|---|---|
| --roles | List of roles to assign to the user. The built-in access role allows them to connect to any database server registered with Teleport. |
| --db-users | List of database usernames the user will be allowed to use when connecting to the databases. A wildcard allows any user. |
| --db-names | List of logical databases (aka schemas) the user will be allowed to connect to within a database server. A wildcard allows any database. |

Database names are only enforced for PostgreSQL and MongoDB databases.

For more detailed information about database access controls and how to restrict access, see the RBAC documentation.

Step 2/5. Create a certificate/key pair

Teleport uses mutual TLS authentication with self-hosted databases. These databases must be configured with Teleport's certificate authority to be able to verify client certificates. They also need a certificate/key pair that Teleport can verify.

If you are using Teleport Cloud, your Teleport user must be allowed to impersonate the system role Db in order to generate the database certificate.

Include the following allow rule in your Teleport Cloud user's role:

allow:
  impersonate:
    users: ["Db"]
    roles: ["Db"]

Export Teleport's certificate authority and a generated certificate/key pair for host clickhouse.example.com with a 1-year validity period:

$ tctl auth sign --format=db --host=clickhouse.example.com --out=server --ttl=8766h

We recommend using a shorter TTL, but keep in mind that you'll need to update the database server certificate before it expires so that you don't lose the ability to connect. Pick the TTL value that best fits your use case.

This command will create three files:

- server.cas: Teleport's certificate authority

- server.key: a generated private key

- server.crt: a generated host certificate
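The --ttl value in the command above is given in hours, so 8766h is roughly 365.25 days. The sketch below converts a validity period in days to that form; ttl_hours is a hypothetical helper name, and the 90-day figure is only an illustration of a shorter TTL.

```shell
# Convert a validity period in days to the hour-based form used with --ttl.
ttl_hours() {
  echo "$(( $1 * 24 ))h"
}

ttl_hours 90    # prints 2160h
ttl_hours 365   # prints 8760h
```

The result can be substituted into the command shown earlier, for example --ttl=2160h for a certificate that must be rotated about every three months.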

Step 3/5. Configure ClickHouse

Use the generated secrets to enable mutual TLS in your clickhouse-server/config.xml configuration file:

<openSSL>
  <server>
    <privateKeyFile>/path/to/server.key</privateKeyFile>
    <caConfig>/path/to/server.cas</caConfig>
    <certificateFile>/path/to/server.crt</certificateFile>
    <verificationMode>strict</verificationMode>
  </server>
</openSSL>

Additionally, your ClickHouse database user accounts must be configured to require a valid client certificate:

CREATE USER alice IDENTIFIED WITH ssl_certificate CN 'alice';

By default, the created user may not have access to anything and won't be able to connect, so let's grant it some permissions:

GRANT ALL ON *.* TO alice;

Step 4/5. Configure and start the Database Service

Install and configure Teleport on the host or Kubernetes cluster where you will run the Teleport Database Service:

Linux Server:

Select an edition, then follow the instructions for that edition to install Teleport.

Teleport Community Edition:

The following command updates the repository for the package manager on the local operating system and installs the provided Teleport version:

$ curl https://cdn.teleport.dev/install-v14.3.33.sh | bash -s 14.3.33

Teleport Enterprise:

Debian 9+/Ubuntu 16.04+ (apt):

# Download Teleport's PGP public key
$ sudo curl https://apt.releases.teleport.dev/gpg \
  -o /usr/share/keyrings/teleport-archive-keyring.asc

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport APT repository for v14. You'll need to update this

# file for each major release of Teleport.

$ echo "deb [signed-by=/usr/share/keyrings/teleport-archive-keyring.asc] \
https://apt.releases.teleport.dev/${ID?} ${VERSION_CODENAME?} stable/v14" \
| sudo tee /etc/apt/sources.list.d/teleport.list > /dev/null

$ sudo apt-get update

$ sudo apt-get install teleport-ent

For FedRAMP/FIPS-compliant installations, install the teleport-ent-fips package instead:

$ sudo apt-get install teleport-ent-fips

Amazon Linux 2/RHEL 7 (yum):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport YUM repository for v14. You'll need to update this

# file for each major release of Teleport.

# First, get the major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

$ sudo yum install -y yum-utils

$ sudo yum-config-manager --add-repo "$(rpm --eval "https://yum.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/v14/teleport.repo")"

$ sudo yum install teleport-ent

#

# Tip: Add /usr/local/bin to path used by sudo (so 'sudo tctl users add' will work as per the docs)

# echo "Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin:/usr/local/bin" > /etc/sudoers.d/secure_path

For FedRAMP/FIPS-compliant installations, install the teleport-ent-fips package instead:

$ sudo yum install teleport-ent-fips
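The grep -Eo "^[0-9]+" step in the RPM-based instructions keeps only the leading digits of $VERSION_ID, so the repository URL is built from the OS major version. The sketch below runs the same pipeline on sample values; os_major_version is a hypothetical wrapper and the version strings are illustrative.

```shell
# Reduce a VERSION_ID value such as "9.3" to its major component, using the
# same grep expression as the install commands.
os_major_version() {
  echo "$1" | grep -Eo "^[0-9]+"
}

os_major_version "9.3"    # prints 9
os_major_version "15.5"   # prints 15
os_major_version "2"      # prints 2
```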

Amazon Linux 2/RHEL 7 (zypper):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport Zypper repository for v14. You'll need to update this

# file for each major release of Teleport.

# First, get the OS major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

# Use zypper to add the teleport RPM repo

$ sudo zypper addrepo --refresh --repo $(rpm --eval "https://zypper.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/v14/teleport-zypper.repo")

$ sudo zypper install teleport-ent

#

# Tip: Add /usr/local/bin to path used by sudo (so 'sudo tctl users add' will work as per the docs)

# echo "Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin:/usr/local/bin" > /etc/sudoers.d/secure_path

For FedRAMP/FIPS-compliant installations, install the teleport-ent-fips package instead:

$ sudo zypper install teleport-ent-fips

Amazon Linux 2023/RHEL 8+ (dnf):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport YUM repository for v14. You'll need to update this

# file for each major release of Teleport.

# First, get the major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

# Use the dnf config manager plugin to add the teleport RPM repo

$ sudo dnf config-manager --add-repo "$(rpm --eval "https://yum.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/v14/teleport.repo")"

# Install teleport

$ sudo dnf install teleport-ent

# Tip: Add /usr/local/bin to path used by sudo (so 'sudo tctl users add' will work as per the docs)

# echo "Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin:/usr/local/bin" > /etc/sudoers.d/secure_path

For FedRAMP/FIPS-compliant installations, install the teleport-ent-fips package instead:

$ sudo dnf install teleport-ent-fips

SLES 12 SP5+ and 15 SP5+ (zypper):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport Zypper repository.

# First, get the OS major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

# Use Zypper to add the teleport RPM repo

$ sudo zypper addrepo --refresh --repo $(rpm --eval "https://zypper.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/v14/teleport-zypper.repo")

# Install teleport

$ sudo zypper install teleport-ent

For FedRAMP/FIPS-compliant installations, install the teleport-ent-fips package instead:

$ sudo zypper install teleport-ent-fips

Tarball:

In the example commands below, replace $SYSTEM_ARCH with the appropriate value (amd64, arm64, or arm).

$ curl https://cdn.teleport.dev/teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-bin.tar.gz.sha256

# <checksum> <filename>

$ curl -O https://cdn.teleport.dev/teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-bin.tar.gz

$ shasum -a 256 teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-bin.tar.gz

# Verify that the checksums match

$ tar -xvf teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-bin.tar.gz

$ cd teleport-ent

$ sudo ./install

For FedRAMP/FIPS-compliant installations of Teleport Enterprise, package URLs will be slightly different:

$ curl https://cdn.teleport.dev/teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-fips-bin.tar.gz.sha256

# <checksum> <filename>

$ curl -O https://cdn.teleport.dev/teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-fips-bin.tar.gz

$ shasum -a 256 teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-fips-bin.tar.gz

# Verify that the checksums match

$ tar -xvf teleport-ent-v14.3.33-linux-$SYSTEM_ARCH-fips-bin.tar.gz

$ cd teleport-ent

$ sudo ./install

OS repository channels

The following channels are available for APT, YUM, and Zypper repos. They may be used in place of stable/v14 anywhere in the Teleport documentation.

| Channel name | Description |
|---|---|
| stable/<major> | Receives releases for the specified major release line, e.g. v14 |
| stable/cloud | Rolling channel that receives releases compatible with current Cloud version |
| stable/rolling | Rolling channel that receives all published Teleport releases |
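To illustrate how a channel name slots into the repository definition, the sketch below rebuilds the APT source line from the Debian/Ubuntu instructions with the channel as a parameter. apt_source_line is a hypothetical helper, and ubuntu/jammy are sample values for $ID and $VERSION_CODENAME.

```shell
# Print the APT source line for a given distro ID, codename and channel.
# The keyring path and repository host are the ones used in this guide.
apt_source_line() {
  echo "deb [signed-by=/usr/share/keyrings/teleport-archive-keyring.asc] https://apt.releases.teleport.dev/$1 $2 $3"
}

apt_source_line ubuntu jammy stable/v14
apt_source_line ubuntu jammy stable/rolling
```

Only the last field changes between channels, which is why switching channels means editing /etc/apt/sources.list.d/teleport.list and running apt-get update again.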

Teleport Enterprise Cloud:

Add the Teleport repository to your repository list:

Debian 9+/Ubuntu 16.04+ (apt):

# Download Teleport's PGP public key
$ sudo curl https://apt.releases.teleport.dev/gpg \
  -o /usr/share/keyrings/teleport-archive-keyring.asc

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport APT repository for cloud.

$ echo "deb [signed-by=/usr/share/keyrings/teleport-archive-keyring.asc] \
https://apt.releases.teleport.dev/${ID?} ${VERSION_CODENAME?} stable/cloud" \
| sudo tee /etc/apt/sources.list.d/teleport.list > /dev/null

# Provide your Teleport domain to query the latest compatible Teleport version

$ export TELEPORT_DOMAIN=example.teleport.com

$ export TELEPORT_VERSION="$(curl https://$TELEPORT_DOMAIN/v1/webapi/automaticupgrades/channel/default/version | sed 's/v//')"

# Update the repo and install Teleport and the Teleport updater

$ sudo apt-get update

$ sudo apt-get install "teleport-ent=$TELEPORT_VERSION" teleport-ent-updater

Amazon Linux 2/RHEL 7/CentOS 7 (yum):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport YUM repository for cloud.

# First, get the OS major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

$ sudo yum install -y yum-utils

$ sudo yum-config-manager --add-repo "$(rpm --eval "https://yum.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/cloud/teleport-yum.repo")"

# Provide your Teleport domain to query the latest compatible Teleport version

$ export TELEPORT_DOMAIN=example.teleport.com

$ export TELEPORT_VERSION="$(curl https://$TELEPORT_DOMAIN/v1/webapi/automaticupgrades/channel/default/version | sed 's/v//')"

# Install Teleport and the Teleport updater

$ sudo yum install "teleport-ent-$TELEPORT_VERSION" teleport-ent-updater

# Tip: Add /usr/local/bin to path used by sudo (so 'sudo tctl users add' will work as per the docs)

# echo "Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin:/usr/local/bin" > /etc/sudoers.d/secure_path

Amazon Linux 2023/RHEL 8+ (dnf):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport YUM repository for cloud.

# First, get the OS major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

# Use the dnf config manager plugin to add the teleport RPM repo

$ sudo dnf config-manager --add-repo "$(rpm --eval "https://yum.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/cloud/teleport-yum.repo")"

# Provide your Teleport domain to query the latest compatible Teleport version

$ export TELEPORT_DOMAIN=example.teleport.com

$ export TELEPORT_VERSION="$(curl https://$TELEPORT_DOMAIN/v1/webapi/automaticupgrades/channel/default/version | sed 's/v//')"

# Install Teleport and the Teleport updater

$ sudo dnf install "teleport-ent-$TELEPORT_VERSION" teleport-ent-updater

# Tip: Add /usr/local/bin to path used by sudo (so 'sudo tctl users add' will work as per the docs)

# echo "Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin:/usr/local/bin" > /etc/sudoers.d/secure_path

SLES 12 SP5+ and 15 SP5+ (zypper):

# Source variables about OS version

$ source /etc/os-release

# Add the Teleport Zypper repository for cloud.

# First, get the OS major version from $VERSION_ID so this fetches the correct

# package version.

$ VERSION_ID=$(echo $VERSION_ID | grep -Eo "^[0-9]+")

# Use Zypper to add the teleport RPM repo

$ sudo zypper addrepo --refresh --repo $(rpm --eval "https://zypper.releases.teleport.dev/$ID/$VERSION_ID/Teleport/%{_arch}/stable/cloud/teleport-zypper.repo")

# Provide your Teleport domain to query the latest compatible Teleport version

$ export TELEPORT_DOMAIN=example.teleport.com

$ export TELEPORT_VERSION="$(curl https://$TELEPORT_DOMAIN/v1/webapi/automaticupgrades/channel/default/version | sed 's/v//')"

# Install Teleport and the Teleport updater

$ sudo zypper install "teleport-ent-$TELEPORT_VERSION" teleport-ent-updater
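The Cloud install commands do two small string manipulations: they strip the "v" from the version reported by the cluster, and they pin the package to that version using a separator that depends on the package manager. The sketch below isolates both steps; strip_v_prefix and pinned_package are hypothetical helpers, and v14.3.33 is only a sample of what the version endpoint might return.

```shell
# Same expression as the install commands: removes the first "v" in the string.
strip_v_prefix() {
  echo "$1" | sed 's/v//'
}

# Build the version-pinned package argument for a given package manager.
# APT separates name and version with "=", the RPM-based tools with "-".
pinned_package() {
  case "$1" in
    apt)            echo "teleport-ent=$2" ;;
    yum|dnf|zypper) echo "teleport-ent-$2" ;;
    *)              echo "unsupported package manager: $1" >&2; return 1 ;;
  esac
}

version="$(strip_v_prefix "v14.3.33")"
pinned_package apt "$version"   # prints teleport-ent=14.3.33
pinned_package dnf "$version"   # prints teleport-ent-14.3.33
```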

Is my Teleport instance compatible with Teleport Enterprise Cloud?

Before installing a teleport binary with a version besides v16, read our compatibility rules to ensure that the binary is compatible with Teleport Enterprise Cloud.

Teleport uses Semantic Versioning. Version numbers include a major version, minor version, and patch version, separated by dots. When running multiple teleport binaries within a cluster, the following rules apply:

- Patch and minor versions are always compatible. For example, any 8.0.1 component will work with any 8.0.3 component, and any 8.1.0 component will work with any 8.3.0 component.

- Servers support clients that are one major version behind, but do not support clients that are on a newer major version. For example, an 8.x.x Proxy Service instance is compatible with 7.x.x agents and 7.x.x tsh, but we don't guarantee that a 9.x.x agent will work with an 8.x.x Proxy Service instance. This also means you must not attempt to upgrade from 6.x.x straight to 8.x.x. You must upgrade to 7.x.x first.

- Proxy Service instances and agents do not support Auth Service instances that are on an older major version, and will fail to connect to older Auth Service instances by default. You can override version checks by passing --skip-version-check when starting agents and Proxy Service instances.
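The client/server rule above can be expressed as a small check. This is only a sketch of the rule as stated (same major version, or a client exactly one major version behind the server); major_of and client_supported are hypothetical helpers, not Teleport commands, and the compatibility rules remain the authority.

```shell
# Extract the major version from strings such as "8.3.0" or "v8.3.0".
major_of() {
  echo "${1#v}" | cut -d. -f1
}

# Succeeds when a client at version $2 is covered by a server at version $1:
# the client is on the same major version or exactly one major version behind.
client_supported() (
  server_major="$(major_of "$1")"
  client_major="$(major_of "$2")"
  diff=$(( server_major - client_major ))
  [ "$diff" -eq 0 ] || [ "$diff" -eq 1 ]
)

client_supported 8.1.0 7.4.2 && echo "7.4.2 client with 8.1.0 server: supported"
client_supported 8.1.0 9.0.0 || echo "9.0.0 client with 8.1.0 server: not guaranteed"
```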

The step below will overwrite an existing configuration file, so if you're running multiple services, add --output=stdout to print the config in your terminal, and manually adjust /etc/teleport.yaml.

On the host where you will run the Teleport Database Service, start Teleport with the appropriate configuration.

Generate a configuration file at /etc/teleport.yaml for the Database Service:

HTTP:

$ teleport db configure create \
  -o file \
  --token=/tmp/token \
  --proxy=teleport.example.com:443 \
  --name=example-clickhouse-http \
  --protocol=clickhouse-http \
  --uri=clickhouse.example.com:8443 \
  --labels=env=dev

Native (TCP):

$ teleport db configure create \
  -o file \
  --token=/tmp/token \
  --proxy=teleport.example.com:443 \
  --name=example-clickhouse \
  --protocol=clickhouse \
  --uri=clickhouse.example.com:9440 \
  --labels=env=dev
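For orientation, the static database definition that these flags describe has roughly the following shape in /etc/teleport.yaml. The helper below is a hypothetical sketch that only prints such a db_service fragment; the file actually written by teleport db configure create contains additional settings (such as the proxy address and join token) and may differ in detail.

```shell
# Hypothetical helper: print a db_service fragment for one static database.
# Arguments: database name, protocol (clickhouse or clickhouse-http), URI.
print_db_service_yaml() (
  name="$1"
  protocol="$2"
  uri="$3"
  cat <<EOF
db_service:
  enabled: "yes"
  databases:
  - name: "${name}"
    protocol: "${protocol}"
    uri: "${uri}"
    static_labels:
      env: "dev"
EOF
)

print_db_service_yaml example-clickhouse clickhouse clickhouse.example.com:9440
```

Comparing this fragment with the output of the configure command run with --output=stdout is a quick way to confirm that the protocol and port match the ClickHouse interface you intend to use.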

Configure the Teleport Database Service to start automatically when the host boots up by creating a systemd service for it. The instructions depend on how you installed the Teleport Database Service.

Package Manager:

On the host where you will run the Teleport Database Service, enable and start Teleport:

$ sudo systemctl enable teleport

$ sudo systemctl start teleport

TAR Archive:

On the host where you will run the Teleport Database Service, create a systemd service configuration for Teleport, enable the Teleport service, and start Teleport:

$ sudo teleport install systemd -o /etc/systemd/system/teleport.service

$ sudo systemctl enable teleport

$ sudo systemctl start teleport

You can check the status of the Teleport Database Service with systemctl status teleport and view its logs with journalctl -fu teleport.

Kubernetes Cluster:

Teleport provides Helm charts for installing the Teleport Database Service in Kubernetes clusters.

Set up the Teleport Helm repository.

Allow Helm to install charts that are hosted in the Teleport Helm repository:

$ helm repo add teleport https://charts.releases.teleport.dev

Update the cache of charts from the remote repository so you can upgrade to all available releases:

$ helm repo update

Install the Teleport Kube Agent into your Kubernetes Cluster with the Teleport Database Service configuration.

HTTP:

$ JOIN_TOKEN=$(cat /tmp/token)

$ helm install teleport-kube-agent teleport/teleport-kube-agent \
  --create-namespace \
  --namespace teleport-agent \
  --set roles=db \
  --set proxyAddr=teleport.example.com:443 \
  --set authToken=${JOIN_TOKEN?} \
  --set "databases[0].name=example-clickhouse-http" \
  --set "databases[0].uri=clickhouse.example.com:8443" \
  --set "databases[0].protocol=clickhouse-http" \
  --set "databases[0].static_labels.env=dev" \
  --version 14.3.33

Native (TCP):

$ JOIN_TOKEN=$(cat /tmp/token)

$ helm install teleport-kube-agent teleport/teleport-kube-agent \
  --create-namespace \
  --namespace teleport-agent \
  --set roles=db \
  --set proxyAddr=teleport.example.com:443 \
  --set authToken=${JOIN_TOKEN?} \
  --set "databases[0].name=example-clickhouse" \
  --set "databases[0].uri=clickhouse.example.com:9440" \
  --set "databases[0].protocol=clickhouse" \
  --set "databases[0].static_labels.env=dev" \
  --version 14.3.33

A single Teleport process can run multiple services, for example multiple Database Service instances as well as other services such as the SSH Service or Application Service.

Step 5/5. Connect

Once the Database Service has joined the cluster, log in to see the available databases:

HTTP:

Log in to Teleport and list the databases you can connect to. You should see the ClickHouse database you enrolled earlier:

$ tsh login --proxy=teleport.example.com --user=alice

$ tsh db ls

# Name Description Allowed Users Labels Connect

# ------------------------- ----------- ------------- ------- -------

# example-clickhouse-http [*] env=dev

Create an authenticated proxy tunnel so you can connect to ClickHouse via a GUI database client, or send a request via curl:

$ tsh proxy db --db-user=alice --tunnel example-clickhouse-http

# Started authenticated tunnel for the Clickhouse (HTTP) database "clickhouse-http" in cluster "teleport.example.com" on 127.0.0.1:59215.

# To avoid port randomization, you can choose the listening port using the --port flag.

#

# Use the following command to connect to the database or to the address above using other database GUI/CLI clients:

# $ curl http://localhost:59215/

To test the connection you can run the following command:

$ echo 'select currentUser();' | curl http://localhost:59215/ --data-binary @-

# alice

To log out of the database and remove credentials:

# Remove credentials for a particular database instance.

$ tsh db logout example-clickhouse-http

# Remove credentials for all database instances.

$ tsh db logout

Native (TCP):

Log in to Teleport and list the databases you can connect to. You should see the ClickHouse database you enrolled earlier:

$ tsh login --proxy=teleport.example.com --user=alice

$ tsh db ls

# Name Description Allowed Users Labels Connect

# ----------------------- ----------- ------------- ------- -------

# example-clickhouse [*] env=dev

Connect to the database:

$ tsh db connect --db-user=alice example-clickhouse

# ClickHouse client version 22.7.2.1.

# Connecting to localhost:59502 as user default.

# Connected to ClickHouse server version 23.4.2 revision 54462.

#

# 350ddafd1941 :) select 1;

#

# SELECT 1

#

# Query id: 327cfd34-2fec-4e04-a185-79fc840aa5cf

#

# ┌─1─┐

# │ 1 │

# └───┘

# ↓ Progress: 1.00 rows, 1.00 B (208.59 rows/s., 208.59 B/s.) (0.0 CPU, 9.19 KB RAM)

# 1 row in set. Elapsed: 0.005 sec.

#

# 350ddafd1941 :)

To log out of the database and remove credentials:

# Remove credentials for a particular database instance.

$ tsh db logout example-clickhouse

# Remove credentials for all database instances.

$ tsh db logout

Next steps

- Learn how to restrict access to certain users and databases.

- Learn more about dynamic database registration.

- View the High Availability (HA) guide.

- See the YAML configuration reference for updating dynamic resource matchers or static database definitions.

- Take a look at the full CLI reference.