Running Teleport on IBM Cloud

We've created this guide to give customers an overview of how to use Teleport on IBM Cloud. This guide provides a high-level introduction to setting up and running Teleport in production.

We have split this guide into:

- Teleport on IBM Cloud FAQ

- IBM Teleport Introduction

Teleport Enterprise Cloud takes care of this setup for you so you can provide secure access to your infrastructure right away.

Get started with a free trial of Teleport Enterprise Cloud.

Teleport on IBM Cloud FAQ

Why would you want to use Teleport with IBM Cloud?

Teleport provides privileged access management for cloud-native infrastructure that doesn’t get in the way. Infosec and systems engineers can secure access to their infrastructure, meet compliance requirements, reduce operational overhead, and have complete visibility into access and behavior.

By using Teleport with IBM Cloud, you can unify access to both IBM Cloud and SoftLayer infrastructure.

Which Services can I use Teleport with?

You can use Teleport for all the services that you would SSH into. This guide is focused on IBM Cloud.

We plan on expanding our guide to eventually include using Teleport with IBM Cloud Kubernetes Service.

IBM Teleport Introduction

This guide will cover how to set up, configure and run Teleport on IBM Cloud.

IBM Services required to run Teleport in High Availability:

- IBM Cloud: Virtual Servers with Instance Groups

- Storage: Database for etcd

- Storage: IBM Cloud Object Storage

- Network Services: Cloud DNS

Other things needed:

We recommend setting up Teleport in High Availability (HA) mode. In HA mode, etcd stores the state of the system and IBM Cloud Object Storage stores the audit logs.

IBM Cloud: Virtual Servers with Instance Groups

We recommend Gen 2 IBM Cloud Virtual Servers and Auto Scaling.

- For Staging and POCs we recommend using `bx2-2x8` machines with 2 vCPUs, 8 GB RAM, 4 Gbps.

- For Production we would recommend `cx2-4x8` with 4 vCPUs, 8 GB RAM, 8 Gbps.

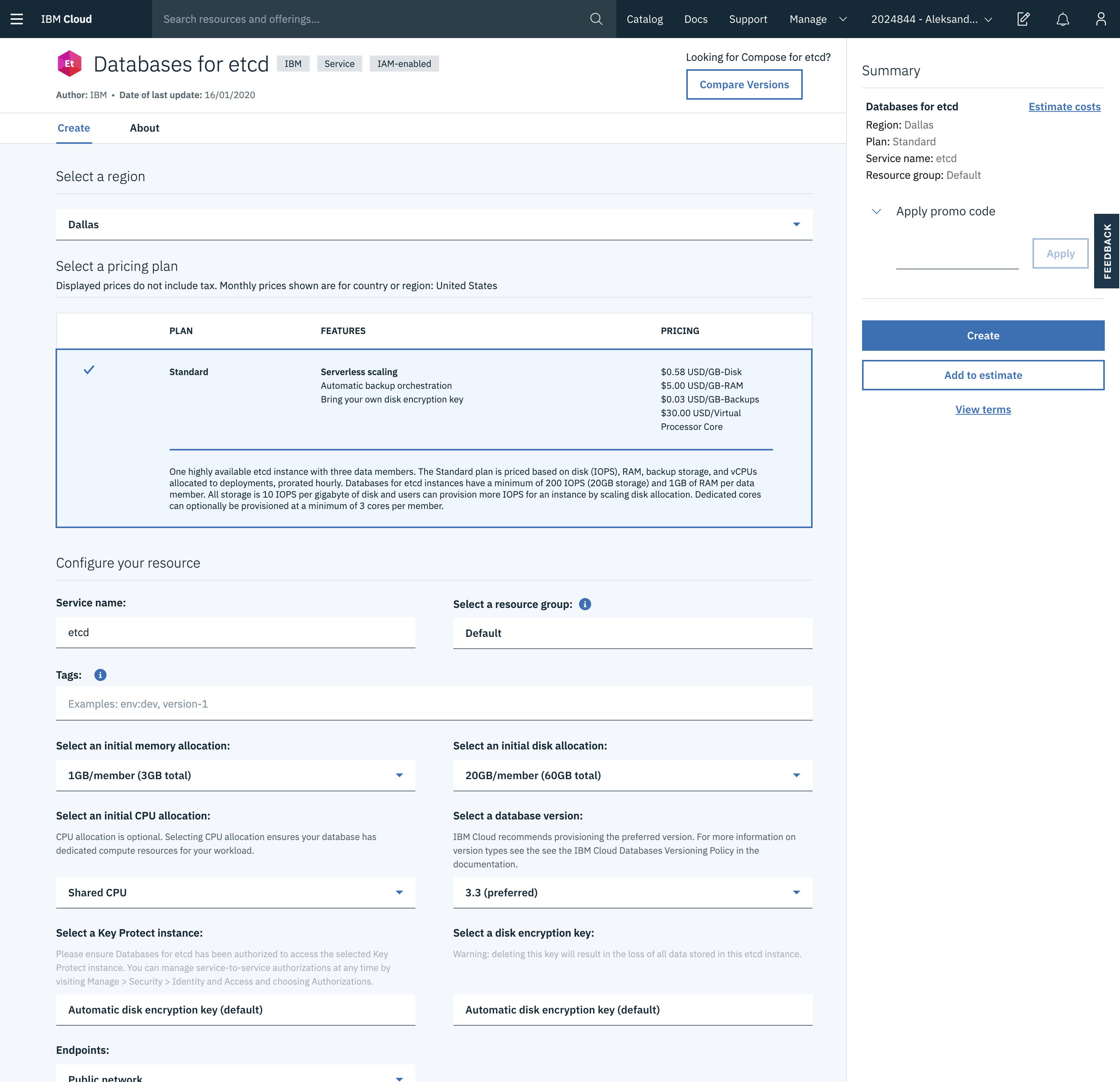

Storage: Database for etcd

IBM offers managed etcd instances. Teleport uses etcd as a scalable database to maintain High Availability and provide graceful restarts. The service has to be turned on from within the IBM Cloud Dashboard.

We recommend picking an etcd instance in the same region as your planned Teleport cluster.

- Deployment region: Same as rest of Teleport Cluster

- Initial Memory allocation: 2GB/member (6GB total)

- Initial disk allocation: 20GB/member (60GB total)

- CPU allocation: Shared

- etcd version: 3.3

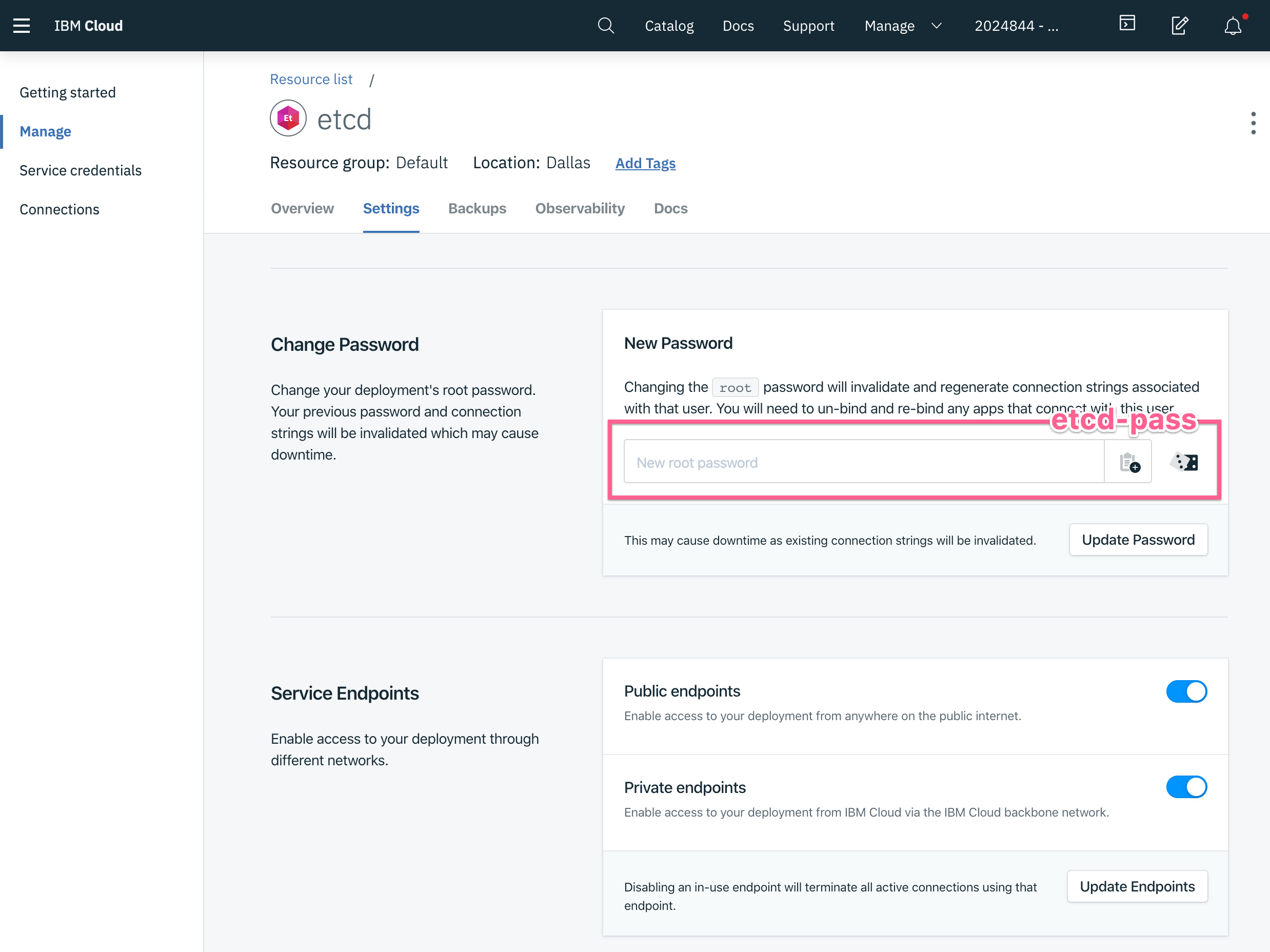

Saving Credentials

teleport:

storage:

type: etcd

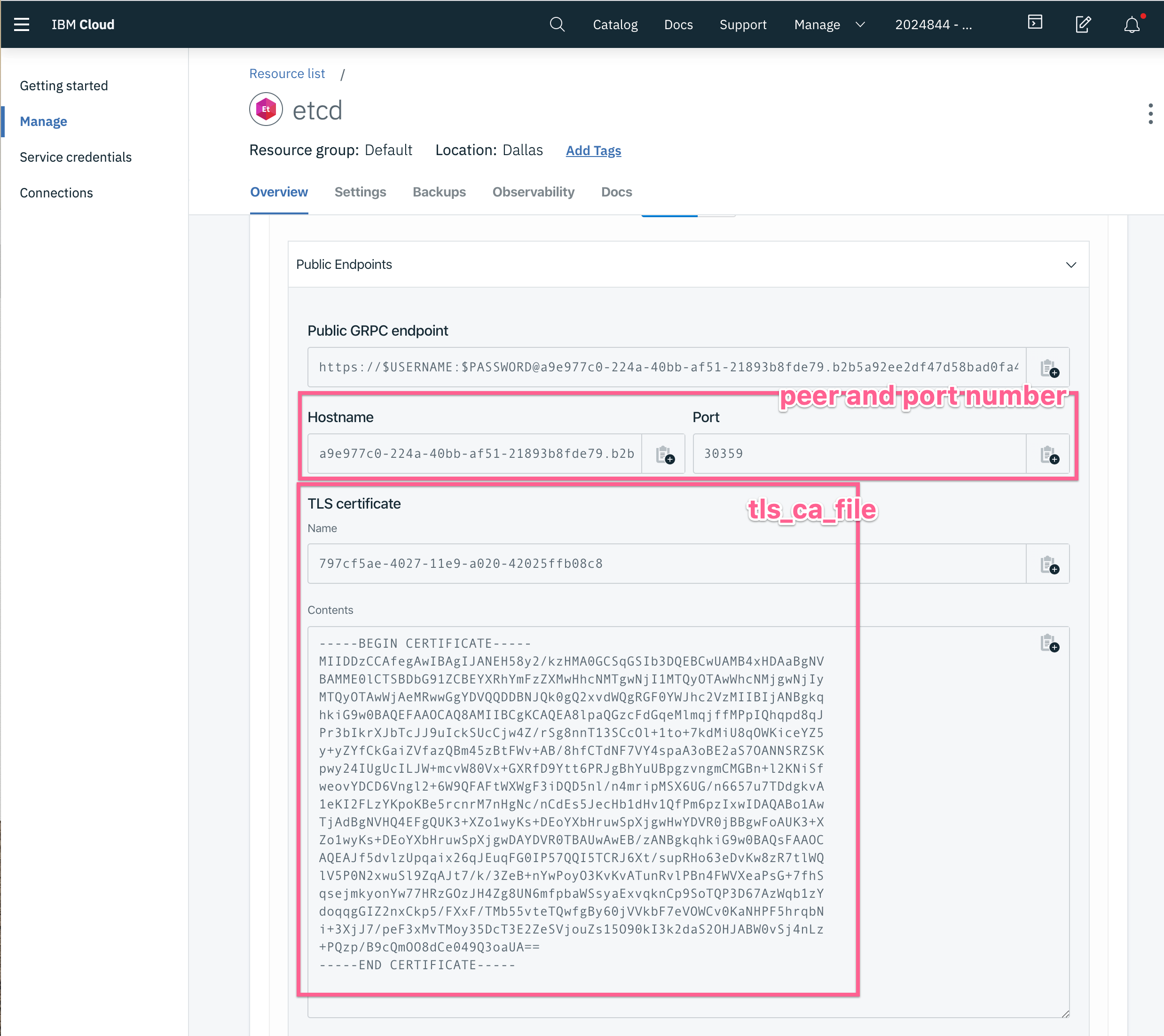

# list of etcd peers to connect to:

# Showing IBM example host and port.

peers: ["https://a9e977c0-224a-40bb-af51-21893b8fde79.b2b5a92ee2df47d58bad0fa448c15585.databases.appdomain.cloud:30359"]

# optional password based authentication

# See https://etcd.io/docs/v3.4.0/op-guide/authentication/ for setting

# up a new user. IBM Defaults to `root`

username: 'root'

# The password file should just contain the password.

password_file: '/var/lib/etcd-pass'

# TLS certificate Name, with file contents from Overview

tls_ca_file: '/var/lib/teleport/797cfsdf23e-4027-11e9-a020-42025ffb08c8.pem'

# etcd key (location) where teleport will be storing its state under.

# make sure it ends with a '/'!

prefix: '/teleport/'

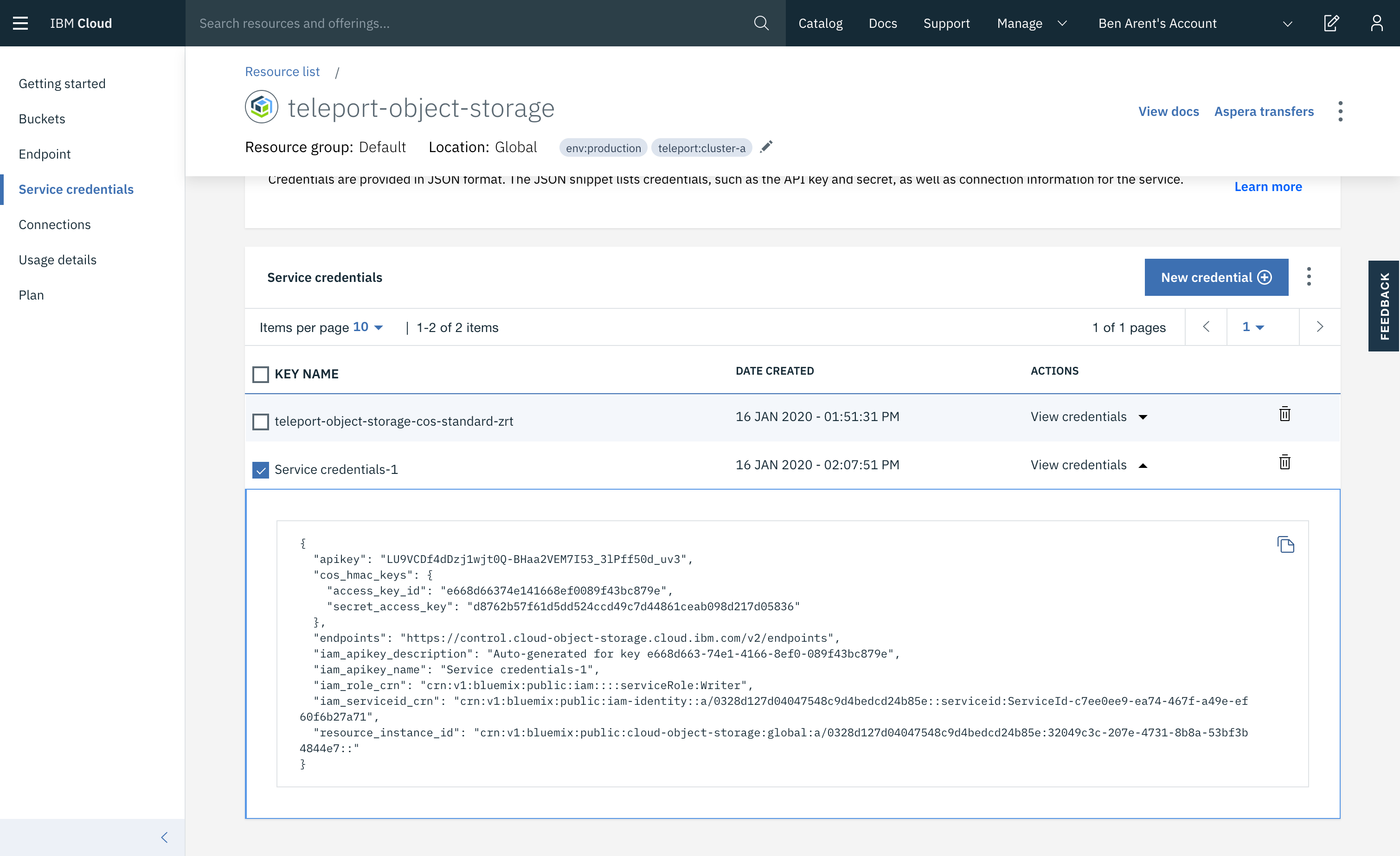
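The configuration comment says the password file should contain only the password. A minimal sketch of writing such a file from the shell follows. The helper name `write_etcd_password` is hypothetical (it is not part of Teleport), and the choice to omit a trailing newline and restrict permissions is an assumption rather than something this guide states.

```shell
#!/bin/sh
# Hypothetical helper (not part of Teleport): write an etcd password to a file
# with no trailing newline and owner-only permissions.
write_etcd_password() {
  file="$1"
  password="$2"
  # Create the file with a restrictive umask so it is never world-readable.
  ( umask 077 && printf '%s' "$password" > "$file" )
  # Tighten permissions as well in case the file already existed.
  chmod 600 "$file"
}

# Example (run as a user allowed to write the target path):
#   write_etcd_password /var/lib/etcd-pass 'example-password'
```

The path in the usage comment matches the `password_file` value shown in the configuration; the password itself is a placeholder.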

Storage: IBM Cloud Object Storage

We recommend using IBM Cloud Object Storage to store Teleport recorded sessions.

- Create a new Object Storage resource (see the Object Storage quick link in the IBM Catalog).

- Create a new bucket.

- Set up HMAC Credentials

- Update audit sessions URI:

audit_sessions_uri: 's3://BUCKET-NAME/readonly/records?endpoint=s3.us-east.cloud-object-storage.appdomain.cloud&region=ibm'

When setting up `audit_sessions_uri`, use the `s3://` prefix.
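The URI has three variable parts: the bucket name, the regional endpoint, and the fixed `region=ibm` parameter. As a rough sketch, a small shell function can assemble it. The function name `audit_sessions_uri` is hypothetical and only mirrors the format used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: assemble the audit_sessions_uri value from a bucket name
# and a regional public endpoint. The /readonly/records path and region=ibm
# follow the format used in this guide.
audit_sessions_uri() {
  bucket="$1"
  endpoint="$2"
  printf 's3://%s/readonly/records?endpoint=%s&region=ibm\n' "$bucket" "$endpoint"
}

audit_sessions_uri "BUCKET-NAME" "s3.us-east.cloud-object-storage.appdomain.cloud"
```

Replace the endpoint with the public endpoint of the region where the bucket was created.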

Teleport reads the credentials from ~/.aws/credentials. Create the service credentials with the HMAC option enabled; the result looks like this:

    {
      "apikey": "LU9VCDf4dDzj1wjt0Q-BHaa2VEM7I53_3lPff50d_uv3",
      "cos_hmac_keys": {
        "access_key_id": "e668d66374e141668ef0089f43bc879e",
        "secret_access_key": "d8762b57f61d5dd524ccd49c7d44861ceab098d217d05836"
      },
      "endpoints": "https://control.cloud-object-storage.cloud.ibm.com/v2/endpoints",
      "iam_apikey_description": "Auto-generated for key e668d663-74e1-4166-8ef0-089f43bc879e",
      "iam_apikey_name": "Service credentials-1",
      "iam_role_crn": "crn:v1:bluemix:public:iam::::serviceRole:Writer",
      "iam_serviceid_crn": "crn:v1:bluemix:public:iam-identity::a/0328d127d04047548c9d4bedcd24b85e::serviceid:ServiceId-c7ee0ee9-ea74-467f-a49e-ef60f6b27a71",
      "resource_instance_id": "crn:v1:bluemix:public:cloud-object-storage:global:a/0328d127d04047548c9d4bedcd24b85e:32049c3c-207e-4731-8b8a-53bf3b4844e7::"
    }

Save the access_key_id and secret_access_key values from cos_hmac_keys to ~/.aws/credentials:

    # Example keys from example service account to be saved into ~/.aws/credentials
    [default]
    aws_access_key_id="abcd1234-this-is-an-example"
    aws_secret_access_key="zyxw9876-this-is-an-example"
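One way to create this file from the shell is sketched below. The helper `write_aws_credentials` is hypothetical. It writes the values unquoted, which is the form AWS uses in its own documentation of the shared credentials file, and it restricts the file to its owner, which is an assumption about good practice rather than a requirement stated in this guide.

```shell
#!/bin/sh
# Hypothetical helper: write HMAC keys in the shared credentials file format.
# $1 = target file, $2 = cos_hmac_keys.access_key_id,
# $3 = cos_hmac_keys.secret_access_key
write_aws_credentials() {
  file="$1"
  access_key_id="$2"
  secret_access_key="$3"
  mkdir -p "$(dirname "$file")"
  (
    # Create the file with owner-only permissions.
    umask 077
    {
      printf '[default]\n'
      printf 'aws_access_key_id=%s\n' "$access_key_id"
      printf 'aws_secret_access_key=%s\n' "$secret_access_key"
    } > "$file"
  )
  # Tighten permissions as well in case the file already existed.
  chmod 600 "$file"
}

# Example, using the placeholder values from this guide:
#   write_aws_credentials "$HOME/.aws/credentials" \
#     "abcd1234-this-is-an-example" "zyxw9876-this-is-an-example"
```

The file must be in the home directory of the user that runs Teleport, since that is where `~/.aws/credentials` resolves.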

Example /etc/teleport.yaml

    ...
    storage:
      ...
      # Note
      #
      # endpoint=s3.us-east.cloud-object-storage.appdomain.cloud | This URL will
      # differ depending on which region the bucket is created. Use the public
      # endpoints.
      #
      # region=ibm | Should always be set as IBM.
      audit_sessions_uri: 's3://BUCKETNAME/readonly/records?endpoint=s3.us-east.cloud-object-storage.appdomain.cloud&region=ibm'
    ...

When you start Teleport with `teleport start --config=/etc/teleport.yaml -d`, the debug output confirms that the bucket has been set up.

    $ sudo teleport start --config=/etc/teleport.yaml -d
    # DEBU [SQLITE] Connected to: file:/var/lib/teleport/proc/sqlite.db?_busy_timeout=10000&_sync=OFF, poll stream period: 1s lite/lite.go:173
    # DEBU [SQLITE] Synchronous: 0, busy timeout: 10000 lite/lite.go:220
    # DEBU [KEYGEN] SSH cert authority is going to pre-compute 25 keys. native/native.go:104
    # DEBU [PROC:1] Using etcd backend. service/service.go:2309
    # INFO [S3] Setting up bucket "ben-teleport-test-cos-standard-b98", sessions path "/readonly/records" in region "ibm". s3sessions/s3handler.go:143
    # INFO [S3] Setup bucket "ben-teleport-test-cos-standard-b98" completed. duration:356.958618ms s3sessions/s3handler.go:147
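To check for the confirmation line without reading the whole log, a filter such as the following may help. The function name `bucket_setup_completed` is hypothetical, and the pattern is based only on the sample output in this guide, so it may need adjusting if the log format differs in your Teleport version.

```shell
#!/bin/sh
# Hypothetical helper: read Teleport log lines on stdin and succeed if the
# S3 handler reported a completed bucket setup.
bucket_setup_completed() {
  grep -q -E '\[S3\] Setup bucket "[^"]+" completed'
}

# Example with one of the sample lines from this guide:
printf '%s\n' 'INFO [S3] Setup bucket "ben-teleport-test-cos-standard-b98" completed. duration:356.958618ms s3sessions/s3handler.go:147' \
  | bucket_setup_completed && echo "bucket setup confirmed"
```

In practice the input would be the captured output of the `teleport start` command rather than a literal string.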

Network: IBM Cloud DNS Services

We recommend using IBM Cloud DNS for the Teleport Proxy public address.

    # The DNS name of the proxy HTTPS endpoint as accessible by cluster users.
    # Defaults to the proxy's hostname if not specified. If running multiple
    # proxies behind a load balancer, this name must point to the load balancer
    # (see public_addr section below)
    public_addr: proxy.example.com:3080
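When verifying the DNS record, it can be handy to split the `public_addr` value into its host and port parts. The sketch below uses POSIX parameter expansion; the helper names are hypothetical and it assumes the value has the `host:port` form shown above.

```shell
#!/bin/sh
# Hypothetical helpers: split a public_addr value of the form host:port.
public_addr_host() { printf '%s\n' "${1%:*}"; }   # strip the trailing :port
public_addr_port() { printf '%s\n' "${1##*:}"; }  # keep only what follows the last colon

addr="proxy.example.com:3080"
echo "host=$(public_addr_host "$addr") port=$(public_addr_port "$addr")"
# A DNS lookup of the host part (for example with nslookup) can then confirm
# that the record created in IBM Cloud DNS resolves.
```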