Teleport Kubernetes Operator

The Teleport Kubernetes Operator provides a way for Kubernetes users to manage some Teleport resources through Kubernetes, following the Operator Pattern.

The Teleport Kubernetes Operator is deployed alongside its custom resource definitions. Once deployed, users can use a Kubernetes client like kubectl or their existing CI/CD Kubernetes pipelines to create Teleport custom resources. The Teleport Kubernetes Operator watches for those resources and makes API calls to Teleport to reach the desired state.

The teleport-cluster Helm chart deploys the Teleport Kubernetes Operator as a sidecar in the auth pods.

The Operator supports multiple auth pod replicas within a single cluster by electing a leader with a Kubernetes lease.

If multiple teleport-cluster releases are using the same backend, only one should have the operator enabled.

Operators running under different leases conflict with one another and end up locked out of Teleport.

Currently supported Teleport resources are:

- users

- roles

- OIDC connectors

- SAML connectors

- GitHub connectors

- Login Rules

This guide covers how to:

- Install Teleport Operator on Kubernetes.

- Manage Teleport users and roles using Kubernetes.

Prerequisites

Teleport Community Edition:

- A running Teleport cluster. For details on how to set this up, see our Getting Started guide.

- The tctl admin tool and tsh client tool version >= 14.3.33.

$ tctl version
# Teleport v14.3.33 go1.21

$ tsh version
# Teleport v14.3.33 go1.21

See Installation for details.

Teleport Enterprise:

- A running Teleport Enterprise cluster. For details on how to set this up, see our Enterprise Getting Started guide.

- The Enterprise tctl admin tool and tsh client tool version >= 14.3.33, which you can download by visiting your Teleport account.

$ tctl version
# Teleport Enterprise v14.3.33 go1.21

$ tsh version
# Teleport v14.3.33 go1.21

Validate Kubernetes connectivity by running the following command:

$ kubectl cluster-info

# Kubernetes control plane is running at https://127.0.0.1:6443

# CoreDNS is running at https://127.0.0.1:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

# Metrics-server is running at https://127.0.0.1:6443/api/v1/namespaces/kube-system/services/https:metrics-server:https/proxy

Users wanting to experiment locally with the operator can use minikube to start a local Kubernetes cluster:

$ minikube start

Step 1/3. Install teleport-cluster Helm chart with the operator

Set up the Teleport Helm repository.

Allow Helm to install charts that are hosted in the Teleport Helm repository:

$ helm repo add teleport https://charts.releases.teleport.dev

Update the cache of charts from the remote repository so you can upgrade to all available releases:

$ helm repo update

Install the Helm chart for the Teleport Cluster with operator.enabled=true:

Teleport Community Edition:

$ helm install teleport-cluster teleport/teleport-cluster \
    --create-namespace --namespace teleport-cluster \
    --set clusterName=teleport-cluster.teleport-cluster.svc.cluster.local \
    --set operator.enabled=true \
    --version 14.3.33

Teleport Enterprise:

Create a namespace for your Teleport cluster resources:

$ kubectl create namespace teleport-cluster

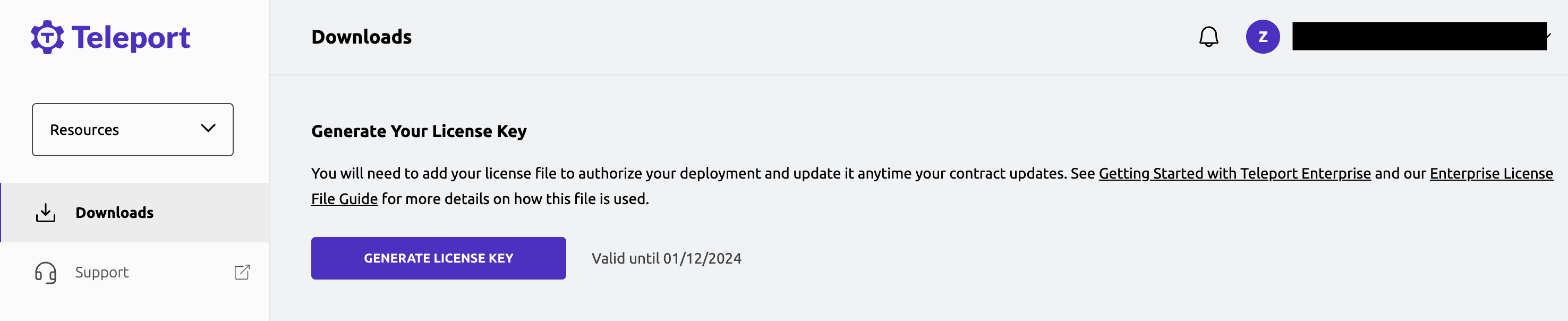

The Teleport Auth Service reads a license file to authenticate your Teleport Enterprise account.

To obtain your license file, navigate to your Teleport account and enter your account name (e.g., my-license). After logging in, click the "DOWNLOAD LICENSE KEY" button to download your license file.

Create a secret called "license" in the namespace you created:

$ kubectl -n teleport-cluster create secret generic license --from-file=license.pem

Deploy your Teleport cluster and the Teleport Kubernetes Operator:

$ helm install teleport-cluster teleport/teleport-cluster \
    --namespace teleport-cluster \
    --set enterprise=true \
    --set clusterName=teleport-cluster.teleport-cluster.svc.cluster.local \
    --set operator.enabled=true \
    --version 14.3.33

This command installs the required Kubernetes CRDs and deploys the Teleport Kubernetes Operator next to the Teleport cluster. All resources (except CRDs, which are cluster-scoped) are created in the teleport-cluster namespace.
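To confirm that the installation did what the paragraph above describes, the following sketch defines two small helpers. The function names are hypothetical and not part of Teleport or the chart. The first assumes the Teleport CRDs all belong to the resources.teleport.dev API group (the group used by the manifests in this guide); the second assumes the chart was installed in the teleport-cluster namespace.

```shell
# List the CRDs whose API group is resources.teleport.dev.
# Prints one CRD name per line; returns non-zero if none are found.
list_teleport_crds() {
  kubectl get crds -o name | grep 'resources\.teleport\.dev$'
}

# List the pods created by the chart.
# $1: namespace (defaults to teleport-cluster, as used in this guide)
list_teleport_pods() {
  kubectl get pods --namespace "${1:-teleport-cluster}"
}
```

After sourcing these definitions, running list_teleport_crds should print entries such as the teleportroles and teleportusers CRDs if the chart installed them, and list_teleport_pods should show the auth pods that host the operator sidecar.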

All subsequent commands will take place in the teleport-cluster namespace, so let's use it by default:

$ kubectl config set-context --current --namespace teleport-cluster

Step 2/3. Manage Teleport users and roles using kubectl

Create a manifest called teleport-resources.yaml that describes two custom resources: a TeleportUser and a TeleportRole:

apiVersion: resources.teleport.dev/v5
kind: TeleportRole
metadata:
  name: myrole
spec:
  allow:
    rules:
      - resources: ['user', 'role']
        verbs: ['list','create','read','update','delete']
---
apiVersion: resources.teleport.dev/v2
kind: TeleportUser
metadata:
  name: myuser
spec:
  roles: ['myrole']

Apply the manifests to the Kubernetes cluster:

$ kubectl apply -f teleport-resources.yaml

List the created Kubernetes resources:

$ kubectl get teleportroles

# NAME AGE

# myrole 10m

$ kubectl get teleportusers

# NAME AGE

# myuser 10m
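Seeing a resource in Kubernetes does not by itself mean that the operator has applied it to Teleport. As described under Troubleshooting, the operator records a SuccessfullyReconciled condition in each resource's status. The sketch below wraps kubectl wait to block until that condition is true. The function name is hypothetical and the 60s default timeout is an arbitrary choice.

```shell
# Block until the operator reports a successful reconciliation, or time out.
# $1: resource, written as type/name (e.g. teleportusers/myuser)
# $2: timeout (defaults to 60s)
wait_reconciled() {
  kubectl wait --for=condition=SuccessfullyReconciled "$1" --timeout="${2:-60s}"
}
```

For example, wait_reconciled teleportusers/myuser returns once the condition is true and exits with an error if the timeout expires first, which makes it usable as a gate in a CI/CD pipeline.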

Check the user myuser has been created in Teleport and has been granted the role myrole:

$ AUTH_POD=$(kubectl get po -l app=teleport-cluster -o jsonpath='{.items[0].metadata.name}')

$ kubectl exec -it "$AUTH_POD" -c teleport -- tctl users ls

# User Roles

# ----------------------------- -----------------------------

# bot-teleport-operator-sidecar bot-teleport-operator-sidecar

# myuser myrole

At this point the Teleport Kubernetes Operator is functional and Teleport users and roles can be managed from Kubernetes.

Step 3/3. Explore the Teleport CRDs

Available fields can be browsed with kubectl explain in a cluster with Teleport CRDs installed.

For example, the command:

$ kubectl explain teleportroles.spec

returns the following fields:

KIND:     TeleportRole
VERSION:  resources.teleport.dev/v5

RESOURCE: spec <Object>

DESCRIPTION:
     Role resource definition v5 from Teleport

FIELDS:
   allow    <Object>
     Allow is the set of conditions evaluated to grant access.

   deny     <Object>
     Deny is the set of conditions evaluated to deny access. Deny takes priority
     over allow.

   options  <Object>
     Options is for OpenSSH options like agent forwarding.
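The output above stops at the first level of fields. To see everything nested below a path in one pass, kubectl explain accepts a --recursive flag. A minimal sketch follows; the function name is hypothetical.

```shell
# Print the full field tree below a path.
# $1: field path (e.g. teleportroles.spec.allow)
explain_recursive() {
  kubectl explain "$1" --recursive
}
```

For example, explain_recursive teleportroles.spec.allow lists the nested fields under allow, without their descriptions.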

Troubleshooting

Resource is not reconciled in Teleport

The Teleport Operator watches for new resources or changes in Kubernetes. When a change happens, it triggers the reconciliation loop. This loop is in charge of validating the resource, checking if it already exists in Teleport and making calls to the Teleport API to create/update/delete the resource. The reconciliation loop also adds a status field on the Kubernetes resource.

If an error happens and the reconciliation loop is not successful, an item in status.conditions will describe what went wrong. This allows users to diagnose errors by inspecting Kubernetes resources with kubectl:

$ kubectl get teleportusers myuser -o yaml

For example, if a user has been granted a nonexistent role the status will look like:

apiVersion: resources.teleport.dev/v2
kind: TeleportUser
# [...]
status:
  conditions:
    - lastTransitionTime: "2022-07-25T16:15:52Z"
      message: Teleport resource has the Kubernetes origin label.
      reason: OriginLabelMatching
      status: "True"
      type: TeleportResourceOwned
    - lastTransitionTime: "2022-07-25T17:08:58Z"
      message: 'Teleport returned the error: role my-non-existing-role is not found'
      reason: TeleportError
      status: "False"
      type: SuccessfullyReconciled

Here SuccessfullyReconciled is False and the error is role my-non-existing-role is not found.
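Reading the full YAML is not always necessary. The sketch below uses a kubectl JSONPath filter to print only the conditions whose status is "False", one per line. The function name is hypothetical; the field names are the ones shown in the status above.

```shell
# Print "<type>: <message>" for every status condition whose status is "False".
# $1: resource type (e.g. teleportusers)
# $2: resource name (e.g. myuser)
failed_conditions() {
  kubectl get "$1" "$2" \
    -o jsonpath='{range .status.conditions[?(@.status=="False")]}{.type}{": "}{.message}{"\n"}{end}'
}
```

Calling failed_conditions teleportusers myuser prints nothing when every condition is true, so empty output can be read as "no reported failure" rather than as proof that the resource was reconciled.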

If the status is not present or does not give sufficient information to solve the issue, check the operator logs:

$ kubectl logs "$AUTH_POD" -c operator

In case of multi-replica deployments, only one operator instance is running the reconciliation loop. This operator is called the leader and is the only one producing reconciliation logs. The other operator instances are waiting with the following log:

leaderelection.go:248] attempting to acquire leader lease teleport/431e83f4.teleport.dev...

To diagnose reconciliation issues, you will have to inspect all pods to find the one reconciling the resources.
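The sketch below shows two ways to narrow that search. Both function names are hypothetical. The first lists the Lease objects in a namespace with their current holder; it assumes the lease lives in the teleport-cluster namespace used in this guide, and the holder identity usually, but not necessarily, contains the pod name. The second prints the tail of the operator container logs for every pod matching the app=teleport-cluster label used earlier in this guide.

```shell
# Print each Lease in the namespace with its current holder.
# $1: namespace (defaults to teleport-cluster)
show_lease_holders() {
  kubectl get leases --namespace "${1:-teleport-cluster}" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.holderIdentity}{"\n"}{end}'
}

# Print the last lines of the operator container logs for every auth pod
# in the current namespace.
# $1: number of lines per pod (defaults to 20)
tail_operator_logs() {
  for pod in $(kubectl get pods -l app=teleport-cluster \
      -o jsonpath='{.items[*].metadata.name}'); do
    echo "== $pod =="
    kubectl logs "$pod" -c operator --tail="${1:-20}"
  done
}
```

The pod whose recent logs contain reconciliation messages, rather than the "attempting to acquire leader lease" line, is the leader.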

The operator does not see the resource

If you created a resource and can get it with kubectl get <resource-type>, but the resource status stays empty and the operator does not produce logs, check whether the resource lives in the teleport-cluster namespace. The operator only watches for resources in its own namespace.
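One way to spot resources created in the wrong namespace is to list a resource type across all namespaces and hide the rows that belong to the operator's namespace. This is a sketch: the function name is hypothetical, and the default namespace is the one used in this guide.

```shell
# List resources of one type that live outside the operator's namespace.
# Prints "<namespace> <name>" per resource; prints nothing if there are none.
# $1: resource type (e.g. teleportusers)
# $2: operator namespace (defaults to teleport-cluster)
resources_outside_operator_namespace() {
  kubectl get "$1" --all-namespaces --no-headers \
    -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name' |
    awk -v ns="${2:-teleport-cluster}" '$1 != ns'
}
```

Any line printed by resources_outside_operator_namespace teleportusers names a resource that the operator will not see until it is recreated in the operator's namespace.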

Cluster instability after enabling the Operator

If enabling the Teleport Kubernetes Operator leads to cluster instability such as degraded proxy state, inability to log into the Teleport cluster, or loss of access to the Teleport Web UI, check where your Auth Server pods are deployed. All operator sidecars connecting to the same shared backend must belong to the same teleport-cluster chart release to support the leader election process.

Failing to do so can lead to a degraded Teleport cluster and errors such as:

[AUTH] WARN lock targeting User:"bot-example" is in force: The bot user "bot-teleport-operator-sidecar" has been locked due to a certificate generation mismatch, possibly indicating a stolen certificate.

In order to resolve this issue, remove any Teleport Kubernetes Operator sidecars connecting to the shared backend that are not in the same Kubernetes cluster or namespace. Then redeploy the Auth Server pods. When the operator starts, it deletes the cached information and recreates all required resources.

Next steps

Helm chart parameters are documented in the teleport-cluster Helm chart reference.

Check out the access controls documentation.